Image source: ENISA

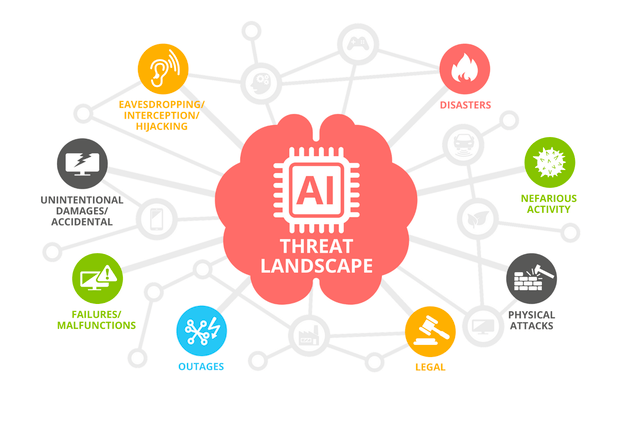

AI has been around for a while, but the recent surge in adoption of generative AI solutions such as ChatGPT, Bard and others has made it a hotbed for cybersecurity threats and threat actors. In this post, let's explore some of the security challenges we can expect in the age of generative AI, and AI in general.

Here is an excellent post on how a researcher was able to use ChatGPT to write ransomware

Common risks we can expect to see as AI adoption increases:

- Model Poisoning – Model poisoning refers to subtly altering the training data of an AI model so that the model learns incorrect patterns or behaviours. This can be particularly insidious because the model may appear to function normally while making compromised decisions.

- Model Injection – Model injection attacks involve injecting malicious models into AI systems. This can happen if an attacker gains access to a system where they can replace a legitimate model with a compromised one, or if they can manipulate the system into accepting a malicious model during an update or integration process.

- Social Engineering – AI can be used to automate and enhance social engineering attacks, such as spear phishing, by analysing large amounts of data to create highly personalized and convincing scams.

- Network Attacks – AI can be used to identify vulnerabilities in networks and systems, and to optimize network attacks such as DDoS or man-in-the-middle attacks.

- Attack Optimization – AI can be used to automate and optimize cyber-attacks. AI algorithms can analyse defense mechanisms and adapt attacks in real time, making them more efficient and harder to detect.

- Phishing – Expect more advanced and sophisticated emails that use social engineering more effectively than ever before.

- Over-reliance on AI – While AI will advance the detection of attacks and attack patterns and shorten the dwell time for incidents, there is a risk of reduced human oversight, which may result in missed detections and threats.
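
To make the model poisoning risk from the list above more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the one-dimensional "suspicion score" feature, the toy nearest-centroid classifier, and the function names `train_centroids` and `predict` are hypothetical and not taken from any real detection product. It only aims to show how a few mislabelled training samples can shift a decision boundary while obvious cases are still classified correctly.

```python
from statistics import mean


def train_centroids(samples):
    """Return the mean feature value per label (a toy 1-D nearest-centroid model)."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the given value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# Hypothetical training set: (suspicion score, label).
clean_data = [
    (1, "benign"), (2, "benign"), (3, "benign"),
    (7, "malicious"), (8, "malicious"), (9, "malicious"),
]

# Assumed poisoning step: high-score samples slipped in with a "benign" label.
poison = [(8, "benign"), (9, "benign"), (9, "benign")]

clean_model = train_centroids(clean_data)
poisoned_model = train_centroids(clean_data + poison)

# Compare both models on an obvious benign, a borderline, and an obvious malicious score.
for value in (1, 6, 9):
    print(value, predict(clean_model, value), predict(poisoned_model, value))
```

In this toy setup both models agree on the clear-cut scores of 1 and 9, but the borderline score of 6 is flagged as malicious by the clean model and passed as benign by the poisoned one. That is the "appears to function normally" behaviour described above, and one reason why spot checks on obvious cases may not reveal a poisoned model.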